Running large language models (LLMs) locally offers several advantages, including better privacy, no network latency, and the ability to work offline. Although powerful language models are now widespread, most people interact with them only through services run by large technology companies such as OpenAI, which operate massive hardware clusters.

However, thanks to advances in model architectures and hardware, there are now many excellent open-source options that let you run capable LLMs and their variants entirely on your own hardware. This gives you full privacy and control over your data without relying on large companies.

Here are six ways you can run LLMs locally.

1. GPT4ALL – A Standout Option for Running LLMs Locally

Website: GPT4ALL

- Ease of Use: GPT4ALL offers a user-friendly desktop chat application with a simple installer.

- Model Range: Supports models ranging from 130 million to 6.7 billion parameters.

- Advantages: Easy installation, customization, small model size, developer support, and enhanced privacy.

GPT4All is a platform for interacting with large language models locally, with a focus on privacy: no data leaves the user’s device during model interactions. Straightforward instructions walk you through setting up and configuring models locally, so you get the capabilities of an LLM while keeping your data secure.

Key features of GPT4ALL:

- Easy installation: GPT4ALL provides installers for Windows, Mac, and Linux, making it accessible to non-technical users.

- Customization: Dataset curation tools like Atlas enable training customized GPT4ALL models for different use cases.

- Small size: GPT4ALL models are sized for consumer hardware, requiring no special configuration.

- Developer support: Python APIs, training frameworks, and data collection tools cater to technical users wanting to integrate GPT4ALL.

- Privacy: Once a model has been downloaded, GPT4ALL doesn’t require an internet connection, so conversation data stays local and users keep privacy and control.

For both individuals getting started with LLMs and developers wanting to deploy AI locally, GPT4ALL checks all the boxes. It exemplifies the best practices for making natural language models accessible offline.
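To get a feel for what "sized for consumer hardware" means, here is a rough back-of-the-envelope sketch. It assumes memory is dominated by the model weights and ignores runtime overhead such as the context cache, so treat the numbers as lower bounds. The bit widths (16, 8, and 4 bits per weight) are illustrative assumptions about common precision and quantization levels, not figures taken from GPT4ALL itself.

```python
def weight_memory_gb(parameters: float, bits_per_weight: int) -> float:
    """Approximate memory for the weights alone, in decimal gigabytes.

    Assumption: every weight is stored at the same precision and nothing
    else (context cache, activations, runtime overhead) is counted.
    """
    bytes_total = parameters * bits_per_weight / 8
    return bytes_total / 1e9


# The two ends of the parameter range mentioned above.
MODEL_SIZES = {"130M": 130e6, "6.7B": 6.7e9}

for name, parameters in MODEL_SIZES.items():
    for bits in (16, 8, 4):
        estimate = weight_memory_gb(parameters, bits)
        print(f"{name} model at {bits}-bit weights: ~{estimate:.2f} GB")
```

Under these assumptions, a 6.7-billion-parameter model needs about 13.4 GB for its weights at 16 bits each, but only about 3.35 GB at 4 bits, which is why quantized models fit on ordinary laptops.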

2. LM Studio – A Standout for Custom LLMs

Website: LM Studio

LM Studio is a user-friendly platform designed for running large language models locally. It offers a wide range of pre-trained models and provides a seamless experience for users to discover, download, and run these models on their own devices. LM Studio supports uses like summarization, Q&A, and chatbot development, making it versatile for leveraging model capabilities locally.

Key features of LM Studio include:

- Supports leading LLMs: Compatible with popular models such as GPT-Neo and LLaMA, and with models hosted in Hugging Face repositories, making it easy to explore new models.

- User-friendly interface: A built-in chat window and a local API server enable intuitive interaction. The chat window requires no coding, which suits less technical users.

- Handles ML complexity: Data processing, training loops, and monitoring are handled seamlessly, allowing users to focus on innovation.

- Customizable workflows: Python APIs integrate into complex ML pipelines for advanced users. Custom models can be trained.

- Accessible ecosystem: Open source development and community make LM Studio approachable for diverse skill levels.

- Local runtime focus: Brings the capabilities of cutting-edge large language models offline.

For users of any skill level, LM Studio lowers barriers to leveraging LLMs locally, positioning it as a premier on-edge runtime for natural language AI exploration. It’s a standout option among local LLM solutions.
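The local API server mentioned above follows the OpenAI chat-completions format, so a script can talk to it with plain HTTP. The sketch below uses only the Python standard library. It assumes the server has been started from within LM Studio, that it listens on the default address `http://localhost:1234`, and that a model is already loaded; the `"local-model"` name is a placeholder, and `build_chat_request` and `chat` are hypothetical helper names chosen for this example.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running on its default port.
LM_STUDIO_URL = "http://localhost:1234/v1/chat/completions"


def build_chat_request(
    user_message: str,
    system_prompt: str = "You are a helpful assistant.",
    temperature: float = 0.7,
) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request without sending it."""
    payload = {
        "model": "local-model",  # placeholder name for the loaded model
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_message},
        ],
        "temperature": temperature,
    }
    return urllib.request.Request(
        LM_STUDIO_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(user_message: str, timeout: float = 120.0) -> str:
    """Send the request to the local server and return the reply text."""
    request = build_chat_request(user_message)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    # OpenAI-style responses put the reply under choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

With the server running, `print(chat("Summarize the benefits of local LLMs."))` should print the model's reply. Because the request never leaves `localhost`, the prompt and the response stay on your machine.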

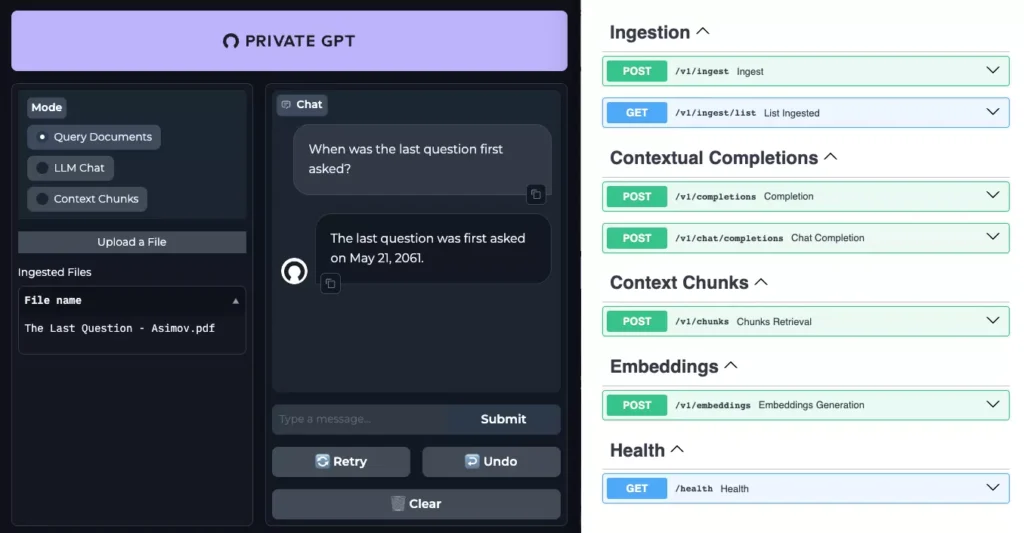

3. PrivateGPT – A Robust Option for Privacy-Preserving LLMs

Project: PrivateGPT

PrivateGPT is an open-source project hosted on GitHub that enables users to interact with their documents using GPT models locally. The project emphasizes privacy and data security, ensuring no data leaks during interactions. With detailed documentation and installation instructions, PrivateGPT allows harnessing GPT privately while maintaining full data control.

Key features include:

- Privacy-focused: No internet or data sharing is required, enabling the building of fully private AI applications.

- Production-ready: Modular FastAPI architecture with quality testing and active development.

- Extends OpenAI API: Familiar interface with document ingestion and RAG capabilities added.

- Active community: A thriving community with 38.7k GitHub stars at the time of writing, and the creator engages with users to guide development.

- Accessibility: UIs, scripts, and docs make using local LLMs approachable for diverse users.

- Customizable: Modular design allows adapting both API and RAG components.

- Gateway to local AI: The long-term goal is to provide building blocks for on-edge apps.

For any project requiring local generative AI with strong privacy, PrivateGPT checks all the boxes, making powerful LLMs attainable without compromising data control.

4. Ollama – A Standout for Simplicity in Local Deployment

Link: Ollama

For those who seek the easiest and most maintenance-free solution, Ollama is an excellent choice.

- Simplicity: Ollama offers a set-and-forget approach, with a single-command setup for large models such as Llama 2.

- Accessibility: A command-line interface and a local HTTP API provide straightforward interaction.

- Performance: Internal acceleration and static memory optimizations boost performance.

Ollama is an open-source tool, developed on GitHub, for setting up and running large language models such as Llama 2 locally. It emphasizes user-friendliness, with step-by-step instructions that make running models locally accessible even to non-technical users.

- It offers guidance on setting up Llama 2 and other large language models on your local device.

- Ollama emphasizes ease of use and provides step-by-step instructions, making it accessible even for beginners.

- By following the instructions in the repository, you can have your LLM up and running in no time.
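Once a model has been pulled (for example with `ollama run llama2` in a terminal), Ollama also serves a local HTTP API that scripts can call. The sketch below uses only the Python standard library and assumes Ollama is running on its default address, `http://localhost:11434`, with the `llama2` model available. The helper names `build_generate_request` and `generate` are hypothetical and exist only for this example.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(prompt: str, model: str = "llama2") -> urllib.request.Request:
    """Build a request for Ollama's generate endpoint without sending it."""
    payload = {
        "model": model,
        "prompt": prompt,
        "stream": False,  # ask for one JSON object instead of a token stream
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "llama2", timeout: float = 120.0) -> str:
    """Send the prompt to the local Ollama server and return the generated text."""
    request = build_generate_request(prompt, model)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    # With streaming disabled, the generated text is in the "response" field.
    return body["response"]
```

With Ollama running, `print(generate("Why run an LLM locally?"))` should print the model's answer. Setting `"stream": False` keeps the example simple; for a chat-style interface you would likely leave streaming on and print tokens as they arrive.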

5. LocalGPT – Leading the Way in Innovation

Link: LocalGPT

localGPT enables private GPT conversations with local documents through chat, Q&A, and summarization. As an open-source project, it guarantees no data sharing and complements documentation with code examples. With comprehensive functionality, users can integrate and utilize local GPT for document tasks while ensuring 100% privacy.

- localGPT is an open-source project that enables you to chat with your documents on your local device using GPT models.

- It guarantees that no data leaves your device, ensuring 100% privacy.

- localGPT offers a comprehensive set of functionalities, including document chat, Q&A, and document summarization.

- The project provides detailed documentation and examples to help you integrate and utilize the LLM effectively.

LocalGPT materially surpasses peers through its focus on the future of LLMs, making it an avant-garde solution for boundary-pushers.

6. H2OGPT – A Champion for Cross-Platform Coverage

Link: H2OGPT

H2OGPT offers private Q&A and document summarization using local GPT models. The project provides demo sites and code repositories for easy exploration. It supports various models, including Llama 2, keeps processing local for privacy, and is released under the Apache license. Extensive documentation helps users run local GPT models effectively through H2OGPT.

- Cross-Platform: H2OGPT offers intuitive plug-and-play installers for Windows, Mac, and Linux.

- Compatibility: It supports a wide range of pre-trained language models through an actively maintained unified interface.

- True “set it and forget it” compatibility: H2OGPT aims to deliver local LLM access consistently across all major operating systems.

Conclusion:

These six solutions cover a diverse set of priorities, from simplicity to customization, so you can choose the one that best fits your context and needs. The future of personal human-AI partnership has never been more promising.
